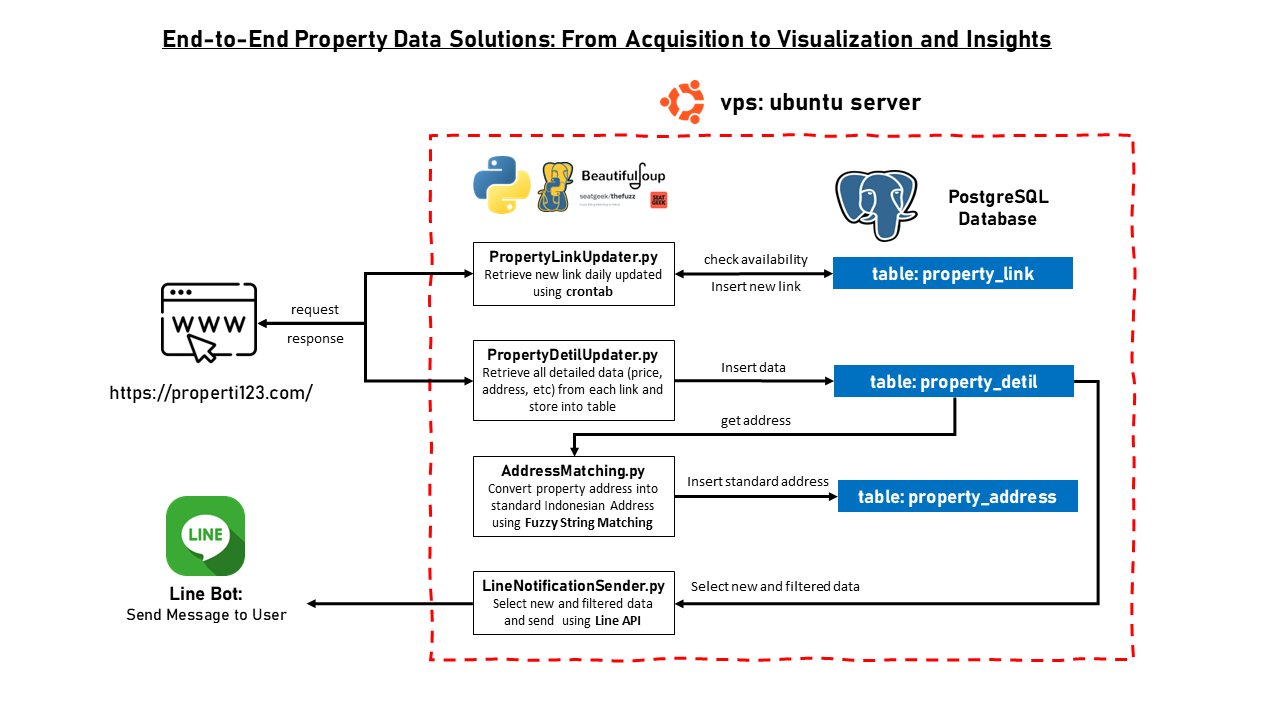

This project delivers a complete end-to-end solution for automated property data management, built to transform raw, scattered listings into clean, structured, and insightful datasets. Running on an Ubuntu VPS, the system automates web scraping, processes and standardizes property information, and stores it in a PostgreSQL database, making it ready for downstream analytics or direct user notification via the LINE Messaging API. It reflects strong capabilities in system design, Python scripting, data normalization, and deployment in a production-like environment.
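As a rough illustration of the notification step, the sketch below builds (but does not send) a push request for the LINE Messaging API using only the standard library. The function names, the listing fields (`title`, `price`, `district`), and the message layout are hypothetical and not taken from the project. The channel access token and the recipient's user ID are assumed to come from configuration.

```python
import json
import urllib.request

# Push-message endpoint of the LINE Messaging API.
LINE_PUSH_URL = "https://api.line.me/v2/bot/message/push"


def format_listing(listing: dict) -> str:
    """Turn one stored listing into a short text message (hypothetical fields)."""
    return f"New listing: {listing['title']} | {listing['price']:,} | {listing['district']}"


def build_push_request(channel_token: str, user_id: str, text: str) -> urllib.request.Request:
    """Build a POST request that pushes one text message to one user."""
    payload = {"to": user_id, "messages": [{"type": "text", "text": text}]}
    return urllib.request.Request(
        LINE_PUSH_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {channel_token}",
        },
        method="POST",
    )


# Sending is left to the caller, for example:
#   with urllib.request.urlopen(build_push_request(token, user_id, text)) as resp:
#       resp.read()
```

Keeping request construction separate from sending makes the message format easy to check without network access or real credentials.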

The solution was engineered to address real-world challenges such as inconsistent data from multiple sources, lack of standard address formatting, and the need for real-time updates. By combining Python scraping with BeautifulSoup, fuzzy matching with TheFuzz, job scheduling with crontab, and messaging automation, the pipeline ensures both accuracy and timely delivery. This project demonstrates not only my technical proficiency but also my ability to design scalable data workflows that deliver business value, skills directly applicable to data engineering, automation, and backend-focused roles.
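To make the address-standardization idea concrete, here is a minimal sketch of fuzzy matching a raw district name against a canonical list. It uses the standard library's `difflib.SequenceMatcher` as a stand-in so the example has no dependencies; the project itself names TheFuzz, where `thefuzz.process.extractOne(raw, choices, score_cutoff=80)` would play a similar role. The district names, the `match_district` helper, and the cutoff of 80 are assumptions for illustration only.

```python
from difflib import SequenceMatcher

# Hypothetical canonical names; a real list would come from a reference table.
CANONICAL_DISTRICTS = ["Downtown", "Riverside", "Old Town", "North Park"]


def similarity(a: str, b: str) -> int:
    """Case-insensitive similarity on a 0-100 scale."""
    ratio = SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()
    return round(100 * ratio)


def match_district(raw: str, choices=CANONICAL_DISTRICTS, cutoff: int = 80):
    """Return (best_match, score), or (None, score) if nothing reaches the cutoff."""
    best = max(choices, key=lambda choice: similarity(raw, choice))
    score = similarity(raw, best)
    return (best, score) if score >= cutoff else (None, score)


if __name__ == "__main__":
    for raw in ["riverside ", "Rivrside", "Unknown Place"]:
        print(repr(raw), "->", match_district(raw))
```

Values that fall below the cutoff are returned as `None` rather than forced onto the nearest name, so they can be queued for manual review instead of silently corrupting the dataset.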

Below is the schematic diagram for reference:

Key Features

1. Automated web scraping
2. Robust ETL pipeline for data processing
3. Comprehensive data validation and cleaning
4. Scalable database architecture
5. Advanced analytics and reporting
6. Scheduled data refreshes and updates
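The validation and cleaning features could look roughly like the sketch below, which turns one raw scraped record into a typed one or rejects it. The `Listing` fields, the raw keys (`title`, `price`, `area`), and the rejection rules are hypothetical; the parser also assumes whole-number prices, so a source with decimal prices would need a different rule.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Listing:
    title: str
    price: int                  # whole currency units (assumption)
    area_sqm: Optional[float]   # None when the source gives no usable area


def parse_price(raw: Optional[str]) -> Optional[int]:
    """Keep only digits, e.g. '3,500,000' -> 3500000. Assumes no decimal part."""
    digits = re.sub(r"[^\d]", "", raw or "")
    return int(digits) if digits else None


def parse_area(raw: Optional[str]) -> Optional[float]:
    """Extract the first number, e.g. '35 sq.m.' -> 35.0."""
    match = re.search(r"\d+(?:\.\d+)?", (raw or "").replace(",", ""))
    return float(match.group(0)) if match else None


def clean_listing(raw: dict) -> Optional[Listing]:
    """Return a cleaned Listing, or None if required fields are missing or invalid."""
    title = " ".join((raw.get("title") or "").split())  # collapse stray whitespace
    price = parse_price(raw.get("price"))
    if not title or price is None or price <= 0:
        return None
    return Listing(title=title, price=price, area_sqm=parse_area(raw.get("area")))
```

Records that come back as `None` can be logged and skipped, so a single malformed listing does not stop a scheduled run.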